Invoice Optical Character Recognition (OCR)

This paper investigated the performance of OCR tools in extracting relevant information from invoices. Three OCR tools were assessed, Tesseract, Google Cloud Vision and Microsoft Azure Computer Vision with fifteen invoices being parsed by each. The code for the project was in python, interactions for each tool were made using their respective API’s.

The code for the project is available on Github

Intro to OCR

The functions for document understanding have been packaged under the machine learning field of optical character recognition (OCR). Aiming to replicate the human ability of reading, the use of an optical mechanism with OCR technology automatically recognises characters. The following figure displays the process outlined in the research of OCR systems authored by Mitheet al. (2013).

Segmentation

Once an image is passed into the system segmentation takes place. Segmentation is carried out on the following three levels: line by line, word by word level and character by character. The visualisation of the different levels of segmentation is shown by bounding boxes around the lines, words and characters detected in the input image.

Preprocessing

There are numerous preprocessing techniques, however, the most useful for invoices are: Binarization were images are converted to only consist of white and black pixels (Jyotsna et al., 2016). Skew correction includes re-alignment of the input image to assure the text is horizontal (Shai and Sid-Ahmed, 2014). Thinning and skeletonization are necessary when the image includes handwritten text, the variance in stroke width is removed for increased readability (Abu-Ain et al., 2013). Lastly, language identification and punctuation correction occur during pre-processing.

Feature extraction

Aas and Eikvil (1996), outline that the identification of all symbols and exclusion of unimportant attributes occur during this step. Examples of the information collected include lines, open spaces and intersections. The characters of the image are mapped to a higher degree by extracting special characteristics of the image such as the texts geometric position.

Recognition

Recognition identities the characters across the lines through a word by word sequence. The level of agreement across multiple contributing factors of the machine learning algorithm used is aggregated into what is known as the confidence level (Wang, Lu and Li, 2018). This information is combined in a selected format and outputted to the user.

Accuracy Measurements for OCR

There is a lack of resources publicly available for measuring the accuracy of OCR systems. With the majority of algorithms being patented by large technology companies. The most promising of which is held by Withum, Oxman and Kopchik (2006). Titled `modified Levenshtein distance algorithm for coding', the patent outlines the use of confidence intervals and Levenshtein Distance as to determine an OCR engines accuracy. Following the variables listed in the patent, the individual elements of the accuracy measurements are examined.

About the Data

The invoices used to test the OCR systems include a sample of ten PDF and five written. Invoices have been numbered from 1-10 are in PDF format with 11-15 being in written format. The sample was comprised of real invoices from European companies including hospitality, telecommunications and aviation. The core of the invoices was in English with information such as company names and addresses varying languages.

Bounding Boxes

Bounding boxes visualise the geometric features extracted from an OCR tool (Mithe et al., 2013). Carried out during the segmentation step, it makes identifying a systems shortfalls easy to spot as words or characters that have not been processed will not plot a bounding box.

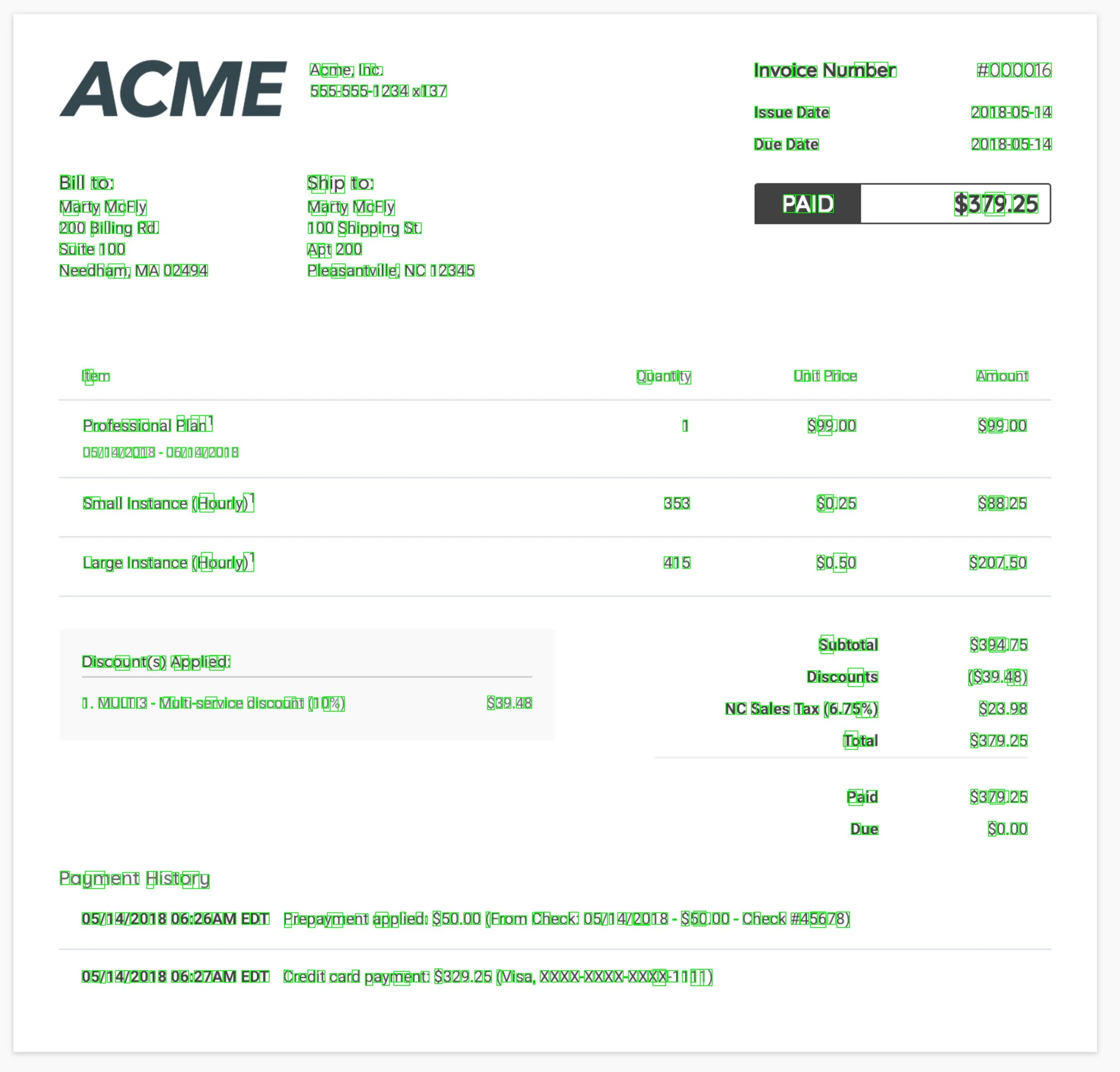

Invoice Layout

Section A typically stored the company logo, name and address.

Section B held the date and invoice number.

Section C was the most important in testing OCR systems ability to extract multiple item names and prices correctly.

Section D usually included the contact details and banking details of the company.

Tesseract

The Tesseract tool has successfully displayed all relevant geometric features. No additional tuning parameters were required. This seems promising for an invoice OCR system that is open-source.

Google Cloud Vision

The OCR offering from Google allows for a more granular visualisation of how the tool identifies bounding boxes. The blue outline represents a section and the green represents words

Azure Computer Vision

Microsofts OCR tool performed with the lest accuracy. Leaving key values unbounded which means they will not be present in the text output covered in the following section. This can be corrected by tuning parameters of the tool. However, the original output is kept in order to maintain a fair test.

Confidence Score

The purpose of a confidence score is to determine how successful a function carried out by a machine learning model has been (Talburt and Zhou, 2015). Various fields are given a score namely language, character and word identification. Each prediction generated by the OCR engine is assigned a score with only the highest score being included in the text output of the system.

Average Confidence Score

Tesseract: 0.866

Google Vision: 0.959.

Azure Vision: 0.849

Google Vision performed well consistently, with scores above 0.9. On the other hand, Tesseract and Azure Vision experience a significant drop in confidence score when processing written invoices (10-15).

Assuming there is a positive relationship between confidence levels and the performance of the system. Therefore, it is expected that Google Vision will have the best Levenshtein Distance score, followed by Tesseract then Azure Vision. Only Google Vision is expected to perform consistanly well for written invoices.

Levenshtein Distance

Levenshtein Distance is the most prominent accuracy instrument that is used to assess outputs in a text format. The instrument measures the `distance' between two strings a and b by counting the number of edits required to transform string a into string b (Levenshtein, 1965). Hardesty (2015) of MIT, stated that despite the algorithm being over 50 years old, experts have determined it to be as good as it gets".

a = string 1, b = string 2, i = terminal character position of string 1, j = terminal character position of string 2. For the condition ai 6= bj : ai = the character of string a at position i, bj = the character of string b at position j

The three minimum rows in the above formula correspond deletion, insertion and substitution of a character. The 'levenshtein' python module replicates the functions of the formula

The format to calculate the number of edits required for string a to match string b is:

lev_distance(string_a, string_b)Average Levenshtein Distance:

Tesseract: 101

Google Vision: 133

Azure Vision: 964

The Tesseract system achieved indicates that the assumption of a positive relationship between confidence levels and Levenshtein distance does not hold. Tesseract outperformed Google Vision although having a lower average Confidence score.

Azure Vision performed very poorly in comparison to the other systems. The most likely factor attributing to Azure Vision’s high Levenshtein distance is the engines failure to parse the invoices text in a line by line sequence respective to the invoices horizontal position. Line by line parsing is crucial for an OCR tool to correctly extracting key text values from invoices due to the keyword and value layout.

Keyword Accuracy

Using the method outlined in section 3.5, the following graph is plotted to assess the OCR tools effectiveness in identifying the keywords.

Firstly the number of edits required for the invoice text to match the keywords is calculated:

keyword_distance = lev_distance(Invoice_text, Keyword_text)Followed by the number of edits required for the text extracted from each OCR system to match the keywords:

OCR_distance = lev_distance(OCR_text, Keyword_text)Finally, the number of extra edits required for the OCR text to match the keywords compared to the number edits required for the invoice text to match the keywords is calculated:

Key_distance = OCR_distance - keyword_distanceAverage Keyword Distance

Tesseract: 30

Google Vision: 20

Azure Vision: 106

Google Vision has correctly identified all keywords for four invoices with zero edits required. Azure Vision has performed substantially better with Keyword Distance rather than in Levenshtein distance. The keywords were identified by the Microsoft Azure during the bounding box stage, however, the system received an immensely large score for Levenshtein Distance. The keyword distance measure may be perceived as a more suitable for accuracy tool in regards to assessing invoice OCR systems.

Results

The proposed accuracy metric of keyword distance performed best at testing for an OCR system for invoice data extraction. Although being the industry standard for measuring accuracy, the Levenshtein Distance did not correctly represent an OCR systems ability to capture the most important values for invoices. Tesseract performed well for an open-source system with the possibility of being a great invoice OCR tool with some parameter tuning. However, Google Vision was a more complete offering with high accuracy results in the most challenging of scenarios. The flexibility of the tool from its ability to successfully capturing alternative languages and written invoices without and additional preprocessing. The least suitable OCR system investigated is the Azure Vision tool with extremely low accuracy scores due to the systems line parsing mechanism. This shortcoming may be amendable through additional hard-coding. However, as we are testing the OCR systems at their face value, Azure Vision may not be a suitable OCR tool for invoice parsing.